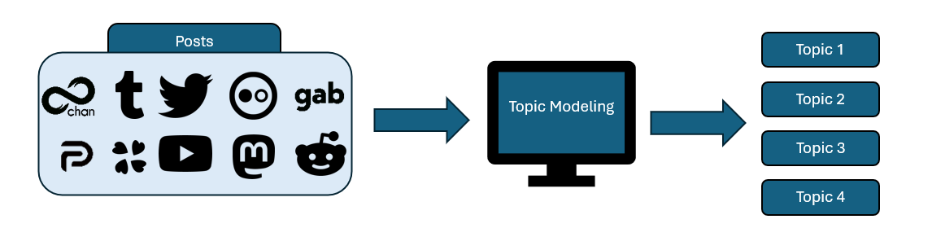

Topic modeling is a type of statistical modeling that extracts topical patterns of text-based data (Egger & Yu, 2022). Typically, within any body of text, users might see a mixture of topics within a corpus or database (Maier et al., 2018; Egger & Yu, 2022). First, topic modeling is applied to sets of documents or posts, then it creates a list of topics or themes that are said to emerge from the data (See Figure 1).

Much like qualitative methods and approaches, it generates a list of topics or themes. However, it is less labor intensive as it is completed computationally rather than manually. Nevertheless, topic modeling is not meant to replace qualitative methods, but it can be used in a complementary way so that the two can inform each other (Andreotta et al., 2019). Historically, topic modeling was created for long-texts. However, with the emergence of social media data and microblogging platforms, there grew a need for topic modeling algorithms that can be applied to short texts as well (Albalawi et al., 2020). To date, there are a variety of topic modeling algorithms that can advance social science research analyses of text-based data, and each algorithm type has unique requirements, assumptions, strengths, and weaknesses (Egger & Yu, 2022). The interpretation and understanding of topic modeling still requires substantial human judgment and domain knowledge that begins with the data pre-processing and the selection of the appropriate algorithms and ends with interpretation of those findings (Albalawi et al., 2020; Hannigan et al., 2019; Egger & Yu, 2022).

In this user guide, we review the use of four well-established topic modeling algorithms that are used across fields of study to provide more context and nuance to text-based analyses (Egger & Yu, 2022). The four that we cover here have been incorporated into our COVID-19 Online Prevalence of Emotions in Institutions Database (COPE-ID) Data Analytics Visualization Tool, including: 1) Latent Semantic Analysis (LSA), 2) Latent Dirichlet Allocation (LDA), 3) Non-Negative Matrix Factorization (NMF), and 4) Bidirectional Encoder Representations from Transformers (BERTopic). Often, each of these are referred to by their acronyms, so we will introduce them once in each section and refer to them by their acronym thereafter. These four algorithms were selected due to their strengths and weaknesses of being better applied to short versus long texts, as well as one that has recently emerged as a state-of-the-art application (BERTopic) for analyzing social media text.

Given that social media data varies in character lengths and other requirements, we wanted users to have a better understanding of what types of topic modeling algorithms can be applied, and how their data and results should be interpreted by human users given the nature and implications of each algorithm type.

1.) Latent Semantic Analysis (LSA)

Latent Semantic Analysis (LSA), one of the most well-known topic modeling algorithms, was first developed and introduced by Dumais et al. (1988) and Deerwester et al. (1990) to solve arising issues with information indexing and retrieval in human-computer interactions (Dumais et al., 1988). LSA begins with Term Frequency-Inverse Document Frequency (TF-IDF) as a weighting technique to identify terms that may be frequently used within a document, or multiple documents, but not across the entire corpus, and assigns these terms more weight (or importance). In other words, TF-IDF is used to identify terms that, even if used relatively rarely across the corpus, may still be important and meaningful to specific documents rather than terms that are common but unimportant. LSA then used Singular Value Decomposition (SVD) to organize the data by recognizing associations among terms within a given body of text and finding themes or broader topics (Madeja & Poruban, 2019). LSA performs best on large text documents with longer text segments and more context (Bradford, 2006; Madeja & Poruban, 2019). While LSA also performs well on medium-length text documents, it struggles to derive meaningful topics from short texts with little to no context (e.g. tweets) (Inzaugarat, 2022). A caveat of using LSA is that the number of topics must be predetermined and choosing too high of a number can result in the production of irrelevant topics while choosing too few can oversimplify the data. The best number of topics for LSA seems to be between seven and ten topics (Madeja & Poruban, 2019). A common practice when using LSA is to preprocess the data by removing stop words (e.g., “the,” “and,” “you,” “I”) and reducing the dimensionality to between 50 – 1000, more commonly to 300 matrices (Sheikha, 2020). A limitation of LSA is the amount of storage space and computing power needed to run it on large text segments. Additionally, for longer text documents, LSA requires long computing time, making it less efficient (Inzaugarat, 2022).

2.) Latent Dirichlet Allocation (LDA)

A well-known unsupervised algorithm, Latent Dirichlet Allocation (LDA), was first introduced by Blei, Ng, & Jordan (2003) to overcome the reliance on linear algebra without considering probabilistic foundations. Thus, they created a generative model that allowed topics to be shared across documents while accounting for probability distributions. That is, this algorithm runs on probability. It automatically clusters words into topics and associates them with other topics. Thus, a document (i.e., collection of words) has a certain probability of distribution over other emerging topics (Paul & Dredze, 2014; Sheikha, 2020). The limitation to this bag-of-words representation is that it neglects semantic relations between words (Grootendorst, 2022).

LDA can apply well to most dataset sizes (Fu et al., 2021). Its application results with good coherency scores for most metrics (Fu et al., 2021; Syed & Spruit, 2017) but its disadvantages are in the number of topics that must be predetermined prior to running the algorithm. This limitation can render less useful topics if an appropriate number is not pre-selected. The number of topics should be selected based on your data size, type, and research objectives. Typically, researchers may select 5-20 topics for small datasets (i.e., hundreds of documents), 20-50 for medium (i.e., thousands of documents), and 50 – 200 or more for large datasets (i.e., thousands or millions of documents). This decision-making process may result in inconsistencies that lead to less useful topics if the decision-making process is not well documented and justified (Fu et al., 2021).

To date, LDA best applies to text considered medium to long in length (Fu et al., 2021; Paul & Dredz, 2014; Sbalchiero & Eder, 2020; Syed & Spruit, 2017). However, in some cases LDA has performed better with short textual data, along with NMF, compared to other algorithms, such as LSA (Albalawi et al., 2020).

Furthermore, because LDA makes some assumptions that social media posts or document, for example, will have some probability distribution over the topics generated in other social media posts; but these topics are inferred rather than observed for input (Paul & Dredze, 2014; Sheikha, 2020). This inference has led some scholars to conclude that its ability to analyze social media data is lacking and needs further testing to locate why this is the case (Egger & Yu, 2021; Sánchez-Franco & Rey-Moreno, 2022; Egger and Yu, 2022). Recall, steps in the decision-making process also involve deciding on the number of topics which may introduce some error into LDA analyses.

3.) Non-Negative Matrix Factorization (NMF)

Non-negative matrix factorization requires that data be preprocessed, and it performs better with shorter text or posts, such as tweets compared to other topic modeling algorithms (Chen et al., 2019). Preprocessing of data for NMF entails lowercasing text, removing stopwords, lemmatizing or stemming, and removal of punctuation and numbers (Egger & Yu, 2022). Like LDA, NMF relies on hyperparameters to extract topics (Egger & Yu, 2022). Thus, researchers must select the optimal number of topics (Egger & Yu, 2021). However, some indicate that NMF may perform more in line with human judgment thus outperforming LDA in general (Egger and Yu, 2022). LDA also has the advantage of TF-IDF weighting rather than relying on raw word frequencies (Albalawi et al., 2020). TF-IDF weighting represents documents based on how frequently a word appears in a single document and how rare the word is across documents. In doing so, it can identify the most relevant words per document which helps locate topics or clusters in the data. However, this weighting process can neglect instances of co-occurrence relations occurring in shorter posts, such as Twitter (Jaradat & Matskin, 2018). Some scholarly research suggests that NMF may not apply as well to noisy or sparse datasets because they often lack enough features for statistical pattern recognition (Cai et al., 2018; Chen et al., 2019). Although other scholars argue that it can analyze noisy data (Blair et al., 2020), whereas other scholars suggest it is possible, but that accuracy of the results cannot be guaranteed (Albalawi et al., 2020; Egger & Yu, 2022). These factors make it difficult for NMF to capture meanings within a corpus, primarily due to convergency issues (Blair et al., 2020; Langville et al., 2014; Egger & Yu, 2022). High coherency scores can be established when the number of topics desired is low. However, when asked to identify too many topics, coherency scores decrease and less useful topics are created (Fu et al., 2021; Langville et al., 2014). High coherency scores suggest that the researcher will be able to evaluate well-defined, consistent, and interpretable topics. Research supports that NMF performs well on short text documents, such as those coming from Twitter, Parler, headlines, or other short-text social media platforms compared to other algorithm types (Athukorala & Mohotti, 2022). To improve coherency scores, matrix factorization has been used to recommend latent features between user interactions and ratings (Obadimu, 2019, p. 2).

4.) Bidirectional Encoder Representations from Transformers (BERTopic)

Initially produced in 2018, Bidirectional Encoder Representations from Transformers (BERT) is a novel large language model that uses an embedding approach. Thus, BERT performs best when applied to short and unstructured texts, making it a promising algorithm for application to social media research (Egger & Yu, 2022). BERT’s embedding approach converts documents into vector representations or embeddings that capture the semantic meaning and context of social media posts as it gains meaning from the context of words (Egger and Yu, 2022; Grootendorst, 2022; Reimers & Gurevych, 2019; Xu et al., 2022). Using the Sentence-BERT (SBERT) Framework, embedding approach can also capture word ordering; without these embeddings, the representation appears as a bag of words. This is one feature that sets BERT apart from the other algorithms (Wang et al., 2020; Galal et al., 2024; Gardazi et al., 2025; Reimers & Gurevych, 2019; Thakur et al., 2020). It is built like transformer models where it can process all input simultaneously compared to Recurrent Neural Networks, which is another type of natural language processing (Tang, 2024). This allows the algorithm to create global topics across time, while not assuming that the temporal nature of the topic is influencing the creation of subsequent topics (Grootendorst, 2022).

BERT is often used in the research and production industries and has become the state of the art for natural language processing as it can represent complex semantic relationships due to being trained with large data (Devlin et al., 2019; Yu et al., 2024). The original BERT model was trained on 800 million words from BooksCorpus and 2.5 billion words from Wikipedia to complete language modeling and sentence prediction (Devlin et al., 2019; Yu et al., 2024). One use case, applied, here is in topic detection, through BERTopic. BERTopic offers a sentence-transformer model for 50 plus languages (Egger & Yu, 2022). This open-source library uses BERT’s model for topic detection using the Term-Frequency – Inverse Document Frequency (TF-IDF) which is an algorithm that is capable of weighting words in a corpus (Sánchez-Franco and Rey-Moreno, 2022; Xu et al., 2022). Thus, the more often a word appears in a document, the more important it is for analytical interpretation purposes (Egger & Yu, 2022). However, this process also accounts for repeat or common words, such as stop words, like “the” compared to other topics that carry more meaning for interpretation. Thus, BERTopic provides more coherent topic representation through its TF-IDF approach to locate meaningful topics of importance (Grootendorst, 2022; Yu et al., 2024). The next three steps pertain to how BERTopic generates topic representation. These include: 1) documents are converted to its embedding representation, 2) dimensionality for those embeddings is reduced to improve the identification of clusters, and 3) topics are extracted using TF-IDF (Grootendorst, 2022, pg. 2).

To best analyze a variety of topics, using an interactive intertopic distance map to inspect the topics can help (Grootendorst, 2020; Egger & Yu, 2022). BERT is also capable of examining longitudinal topic trends in web-based discourse over time (Xu et al., 2022). However, there is a limitation where it can leave out percentages of the corpus when finding incongruent themes (Xu et al., 2022). This is in part due to the fact that BERT assumes a single document only contains one singular topic whereas in reality posts can contain multiple topics (Grootendorst, 2022). Finally, BERT’s primary weakness is due to the number of resources that it requires to perform. If not provided with adequate computational power, the performance of BERT can be impacted, thus highlighting the importance of considering the strengths and weaknesses of other topic modeling algorithms to find the most suitable one for the research task (Gardazi et al, 2025). When interpreting the models, it’s important to remember that researchers can select the most important topics, and that Topic 0 with a –1 indicates outliers are present (Egger & Yu, 2022). Finally, before selecting BERT, it’s important to remember that the original text structure is important when using transformer models, thus this should be performed on unaltered social media text (Egger & Yu, 2022).

Research Summary

Latent Semantic Analysis (LSA), Latent Dirichlet Allocation (LDA), Non-negative Matrix Factorization (NMF), and Bidirectional Encoder Representations from Transformers (BERT/BERTopic) represent four popular approaches to topic modeling, each with unique benefits and limitations brought on by their methodologies.

LSA is the earliest prominent topic modeling technique and, consequently, uses Singular Value Decomposition (SVD) to understand patterns in term co-occurrence. It performs well on large, context-rich documents, but struggles with short and contextually sparse documents, like tweets. LSA requires that the optimal number of topics be predefined, presenting a considerable challenge when implementing the technique – too many topics can create noise, too few topics can oversimplify results. A primary limitation of this technique is its computational demands and inefficiency on large corpora.

LDA, a probabilistic generative model, assumes each document is composed of multiple topics, each characterized by a specific set of words. While effective on medium to long-length texts and diverse dataset sizes, it performs poorly with short or unstructured texts, largely because LDA relies heavily on contextual data, which is limited in these formats. LDA, like LSA, requires that the optimal number of topics be predefined – thus, poorly selecting the number of topics can significantly impact the efficacy of this technique, due to its probabilistic nature. LDA’s bag-of-words assumption limits its ability to understand word order and semantics, making the text length used especially important.

NMF is a matrix factorization method that is commonly paired with TF-IDF weighting. It excels in analyzing short texts but is sensitive to sparse or noisy datasets, which causes issues with topic convergence and coherence, depending on the number of topics requested. This technique is credited with aligning closely to human judgment in topic interpretation, compared to other common techniques. When used carefully, NMF can generate clear and interpretable topics, especially when using short social media data.

BERT, more specifically BERTopic, is a newer class of embedding-based models which capture deep semantic relationships and word order, setting it apart from traditional bag-of-words models, such as LDA. BERT is especially effective on short, unstructured texts, making it ideal for social media analysis. BERTopic becomes attuned for topic modeling by combining BERT embeddings with dimensionality reduction and clustering to derive topics. This approach, paired with its TF-IDF-base representation, results in high interpretability and coherence. These benefits are slightly offset by its computational intensiveness and misalignment with real-world text complexity, as it assumes each document only contains a single topic. Its reliance on preserving the original structure of the text also limits its flexibility in preprocessing.

In sum, assuming adequate computational resources are available, LSA and LDA are generally best suited for longer texts. NMF tends to work well with shorter, more structured data. BERT/BERTopic offers the most novel and semantically rich approach, making it particularly effective for short, noisy, and unstructured text, such as social media data. Ultimately, the best approach to take depends on factors such as text length and structure, available computing power, and current overall research goals.

References

Albalawi, R., Yeap, T. H., & Benyoucef, M. (2020). Using topic modeling methods for short-text data: A comparative analysis. Frontiers in artificial intelligence, 3, 42. https://doi.org/10.3389/frai.2020.00042

Andreotta, M., Nugroho, R., Hurlstone, M. J., Boschetti, F., Farrell, S., Walker, I., & Paris, C. (2019). Analyzing social media data: A mixed-methods framework combining computational and qualitative text analysis. Behavior research methods, 51(4), 1766–1781. https://doi.org/10.3758/s13428-019-01202-8

Athukorala, S., & Mohotti, W. (2022). An effective short-text topic modelling with neighbourhood assistance-driven NMF in Twitter. Social Network Analysis and Mining, 12(1), 89. https://doi.org/10.1007/s13278-022-00898-5

Blair, S.J., Bi, Y. & Mulvenna, M.D. Aggregated topic models for increasing social media topic coherence. Appl Intell 50, 138–156 (2020). https://doi.org/10.1007/s10489-019-01438-z

Blei, D. M., Ng, A. Y., & Jordan, M. I. (2003). Latent dirichlet allocation. Journal of machine Learning research, 3(Jan), 993-1022. http://dx.doi.org/10.7551/mitpress/1120.003.0082

Bradford, R. (2006). Relationship discovery in large text collections using Latent Semantic Indexing. Proceedings of the Fourth Workshop on Link Analysis, Counterterrorism, and Security, 2006.

Chen, Y., Zhang, H., Liu, R., Ye, Z., and Lin, J. (2019). Experimental explorations on short text topic mining between LDA and NMF based Schemes. Knowl. Based Syst. 163, 1–13. doi: 10.1016/j.knosys.2018.08.011

Deerwester, S., Dumais, S. T., Furnas, G. W., Landauer, T. K., & Harshman, R. (1990). Indexing by latent semantic analysis. Journal of the American society for information science, 41(6), 391. https://doi.org/10.1002/(SICI)1097-4571(199009)41:6%3C391::AID-ASI1%3E3.0.CO;2-9

Devlin, J., Chang, M. W., Lee, K., & Toutanova, K. (2019, June). Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 conference of the North American chapter of the association for computational linguistics: human language technologies, volume 1 (long and short papers) (pp. 4171-4186). https://doi.org/10.18653/v1/N19-1423

Dumais, S. T., Furnas, G. W., Landauer, T. K., Deerwester, S., & Harshman, R. (1988, May). Using latent semantic analysis to improve access to textual information. In Proceedings of the SIGCHI conference on Human factors in computing systems (pp. 281-285). https://doi.org/10.1145/57167.57214

Egger, R., and Yu, J. (2021). Identifying hidden semantic structures in Instagram data: a topic modelling comparison. Tour. Rev. 2021:244. https://doi.org/10.1108/TR-05-2021-0244

Egger, R., & Yu, J. (2022). A topic modeling comparison between LDA, NMF, Top2Vec, and BERTopic to demystify Twitter posts. Frontiers in Sociology, 7 (886498). https://doi.org/10.3389/fsoc.2022.886498

Fu, Q., Zhuang, Y., Gu, J., Zhu, Y., & Guo, X. (2021). Agreeing to disagree: Choosing among eight topic-modeling methods. Big Data Research, 23, 100173. https://doi.org/10.1016/j.bdr.2020.100173

Galal, O., Abdel-Gawad, A. H., & Farouk, M. (2024). Rethinking of BERT sentence embedding for text classification. Neural Computing and Applications, 36(32), 20245-20258. https://doi.org/10.1007/s00521-024-10212-3

Gardazi, N. M., Daud, A., Malik, M. K., Bukhari, A., Alsahfi, T., & Alshemaimri, B. (2025). BERT applications in natural language processing: a review. Artificial Intelligence Review, 58(6), 1-49. https://doi.org/10.1007/s10462-025-11162-5

Grootendorst, M. (2022). BERTopic: Neural topic modeling with a class-based TFIDF procedure. arXiv:2203.05794v0571. https://doi.org/10.48550/arXiv.2203.05794

Grootendorst, M. (2024, May). BERTopic: Leveraging BERT and c-TF-IDF to create easily interpretable topics. GitHub, v0.16.2. Retrieved from: https://github.com/MaartenGr/BERTopic

Hannigan, T. R., Haan, R. F. J., Vakili, K., Tchalian, H., Glaser, V. L., Wang, M. S., Kaplan, S., & Jennings, P. D. (2019). Topic modeling in management research: Rendering new theory from textual data. Academy of Management Annals, 13(2), 586-632. https://doi.org/10.5465/annals.2017.0099

Inzaugarat, E. (2022). How to use Latend Semantic Analysis to classify documents. TDS Archive. Available at: https://medium.com/data-science/how-to-use-latent-semantic-analysis-to-classify-documents-1af717e7ee52

Jaradat, S., Matskin, M. (2019). On Dynamic Topic Models for Mining Social Media. In: Agarwal, N., Dokoohaki, N., Tokdemir, S. (eds) Emerging Research Challenges and Opportunities in Computational Social Network Analysis and Mining. Lecture Notes in Social Networks. Springer, Cham. https://doi.org/10.1007/978-3-319-94105-9_8

Langville, A. N., Meyer, C. D., Albright, R., Cox, J., & Duling, D. (2014). Algorithms, initializations, and convergence for the nonnegative matrix factorization (arXiv:1407.7299). arXiv. https://doi.org/10.48550/arXiv.1407.7299

Madeja, M., & Porubän, J. (2019). Accuracy of unit under test identification using Latent Semantic Analysis and Latent Dirichlet Allocation. Proceedings of the IEEE 15th International Scientific Conference on Informatics, 2019, 000161–000166. https://doi.org/10.1109/Informatics47936.2019.9119262

Maier, D., Waldherr, A., Miltner, P., Wiedemann, G., Niekler, A., Keinert, A., … Adam, S. (2018). Applying LDA Topic Modeling in Communication Research: Toward a Valid and Reliable Methodology. Communication Methods and Measures, 12(2–3), 93–118. https://doi.org/10.1080/19312458.2018.1430754

Obadimu, A., Mead, E., Al-Khateeb, S., and Agarwal, N. (2019) A Comparative Analysis of Facebook and Twitter Bots. SAIS 2019 Proceedings. 25. https://aisel.aisnet.org/sais2019/25

Paul MJ, Dredze M (2014) Discovering Health Topics in Social Media Using Topic Models. PLoS ONE 9(8): e103408. https://doi.org/10.1371/journal.pone.0103408

Reimers, N., & Gurevych, I. (2019). Sentence-bert: Sentence embeddings using siamese bert-networks. arXiv preprint arXiv:1908.10084. https://doi.org/10.48550/arXiv.1908.10084

Sánchez‐Franco, M. J., & Rey‐Moreno, M. (2022). Do travelers’ reviews depend on the destination? An analysis in coastal and urban peer‐to‐peer lodgings. Psychology & marketing, 39(2), 441-459. https://doi.org/10.1002/mar.21608

Sbalchiero, S., & Eder, M. (2020). Topic modeling, long texts and the best number of topics. Some Problems and solutions. Quality & Quantity, 54, 1095-1108. https://doi.org/10.1007/s11135-020-00976-w

Sheikha, H. (2020). Text mining Twitter social media for Covid-19: Comparing latent semantic analysis and latent Dirichlet allocation. URN: urn:nbn:se:hig:diva-32567

Syed, S., & Spruit, M. (2017). Full-Text or abstract? Examining topic coherence scores using Latent Dirichlet Allocation. Proceedings of the IEEE International Conference on Data Science and Advanced Analytics, 2017, 165–174. https://doi.org/10.1109/DSAA.2017.61

Tang, Y. (2024). Python Topic Modeling with a BERT Model. Available at: https://deepgram.com/learn/python-topic-modeling-with-a-bert-model. Accessed 22 May 2025.

Thakur, N., Reimers, N., Daxenberger, J., & Gurevych, I. (2020). Augmented SBERT: Data augmentation method for improving bi-encoders for pairwise sentence scoring tasks. arXiv preprint arXiv:2010.08240. https://doi.org/10.18653/v1/2021.naacl-main.28

Wang, B., Shang, L., Lioma, C., Jiang, X., Yang, H., Liu, Q., & Simonsen, J. G. (2020, October). On position embeddings in bert. In International conference on learning representations. https://doi.org/10.48550/arXiv.2404.10518

Xu, Weiai Wayne, et al. (2022). Unmasking the Twitter discourses on masks during the COVID-19 pandemic: User cluster–based BERT topic modeling approach. Jmir Infodemiology 2.2 (2022): e41198. https://doi.org/10.2196/41198

Yu, B., Tang, F., Ergu, D., Zeng, R., Ma, B., & Liu, F. (2024). Efficient classification of malicious urls: M-bert—a modified bert variant for enhanced semantic understanding. Ieee Access, 12, 13453-13468. https://doi.org/10.1109/ACCESS.2024.3357095